Power your brand’s virtual AI assistant with Adobe Experience Manager (AEM) content

The AI revolution is underway, with businesses and industries of all kinds racing to develop ways to leverage the power of generative AI. A lot of the public discourse is centered around content creation, with headlines dominated by Adobe Firefly generating pixel-perfect AI art, or ChatGPT drumming up compelling marketing copy. But there’s another path that has been less discussed, and that is how ChatGPT or any other large language model (LLM) can be used to build the next generation of virtual assistants to guide users through the digital universe and take your user experience to the stars.

Recently, we at Cognizant Netcentric built our own virtual (space) travel assistant called Solari, and showcased it at the Adobe Summit EMEA 2023. Solari is a data-enhanced chatbot that proactively guides users through a conversation, revealing their unique travel preferences and ultimately recommending several personalized travel packages within our solar system. It is an integration of Cognizant Netcentric’s conversational AI solution with Adobe Experience Platform and Adobe Journey Optimizer technologies.

For experience makers, it showcases how brands can use this kind of solution to gather higher-quality first-party data from their customers than ever before and activate it across their customer journeys, unlocking true personalization at scale. Solari is just one example of how combining Adobe Experience Cloud capabilities with a powerful, intuitive virtual assistant can unlock CX opportunities across products and industries.

Here’s how we did it, and what we learned:

Conversations: the new way to search

Until today, search solutions have prevailed as the way for us to find the information and products we’re after. So what’s different about using a conversational interface like Solari, our virtual AI assistant?

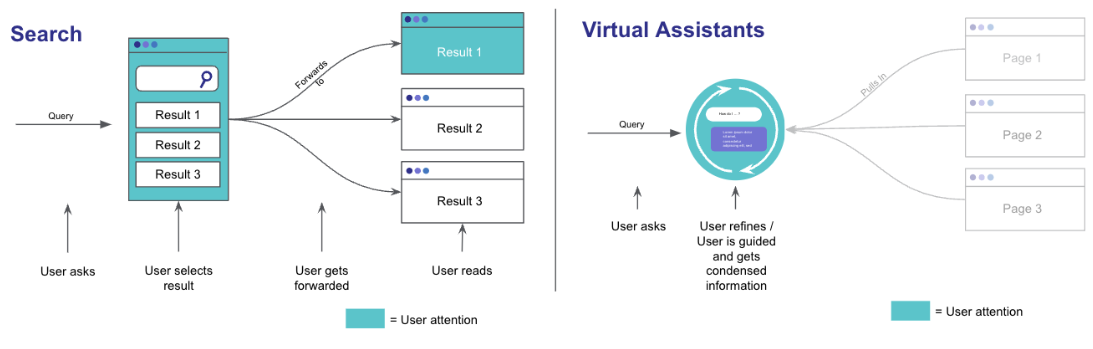

While search solutions act like a map or directory, presenting users with a list of relevant results like pages, documents, or products, conversational assistants take it one step further by bringing the information to the user in a condensed, digestible manner. Users don’t have to navigate further and comb through content to find what they’re looking for. A virtual assistant can personalize and summarize the content in a way that’s tailored to the user’s prompt. All this works without the user having to understand a technical interface: they can interact with the content intuitively in natural language.

Virtual assistants represent a world of opportunity to create great user experiences for websites and applications, and are naturally replacing search solutions in some use cases already.

Search solutions won’t disappear overnight: they’re handy, and they offer a different kind of browsing experience. If we think about online shopping, of course it’s great to be recommended the perfect shirt, but you might also want to browse around to get inspiration for your entire outfit. Search engines built the technical foundation for virtual assistants, after all.

The fact remains that virtual assistants are a great asset for their ability to not only provide information, but to guide users. Going on an interstellar space journey? A virtual assistant can offer contextual help, providing translations so you can understand what Jar Jar Binks is saying, or reminding you to bring a towel.

Virtual assistants can proactively ask about users’ preferences in a conversation, as an agent in a local travel agency would, and so uncover their preferences and needs. This enables the virtual assistant to provide relevant and personalized recommendations without knowing the user upfront. Virtual assistants thus guide users throughout their journey and gather meaningful insights that enrich the user’s profile and enable more advanced personalization use cases.

Virtual assistants need content to do their job

How does a virtual assistant gather the knowledge we want it to have, so it knows how to assist us? The key is content, and here’s the good news: you can create and manage it right in AEM.

When using large language models like ChatGPT, one challenge businesses face is getting them to work with their own content. ChatGPT can generate answers based on content provided alongside a user’s query. So, the simplest way would be to send the content you want the answer to be based on together with each query. This approach has two major downsides:

- Uploading all the required content for each request is wholly inefficient, and not scalable.

- ChatGPT prompts are limited to an input of roughly 4,000 tokens as of now (a token is a fragment of text, often shorter than a word). If your content exceeds that, this approach doesn’t work.
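To make the second downside concrete, here is a minimal sketch of a prompt-budget check. It assumes the common rule of thumb of about four characters per token for English text, which is only an approximation and not a real tokenizer; the function names and sample content are hypothetical.

```python
# Rough prompt-budget check. The 4-characters-per-token ratio is a rule of
# thumb for English text (an assumption), not an exact tokenizer.
CHARS_PER_TOKEN = 4
PROMPT_TOKEN_LIMIT = 4000  # the limit quoted in this article


def estimate_tokens(text: str) -> int:
    """Estimate the token count of a text from its character length."""
    return -(-len(text) // CHARS_PER_TOKEN)  # ceiling division


def fits_in_prompt(query: str, documents: list[str],
                   limit: int = PROMPT_TOKEN_LIMIT) -> bool:
    """Return True if the query plus all documents stay within the limit."""
    total = estimate_tokens(query) + sum(estimate_tokens(d) for d in documents)
    return total <= limit


if __name__ == "__main__":
    query = "Which trips to Mars do you offer?"
    page = "Mars weekend getaway. " * 10   # hypothetical page content
    print(fits_in_prompt(query, [page] * 5))    # a handful of pages
    print(fits_in_prompt(query, [page] * 500))  # a whole website
```

A handful of short pages fits comfortably, while a full site quickly exceeds the budget, which is why preselecting content becomes necessary.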

Microsoft, an OpenAI partner and investor, has released a solution to address this problem: using search engines to preselect relevant documents, which ChatGPT or another LLM can then use to generate the answer. Search engines have the linguistic and natural language processing tools needed to select the most relevant documents and pages for a user’s query.

Leveraging AEM to build better virtual assistants

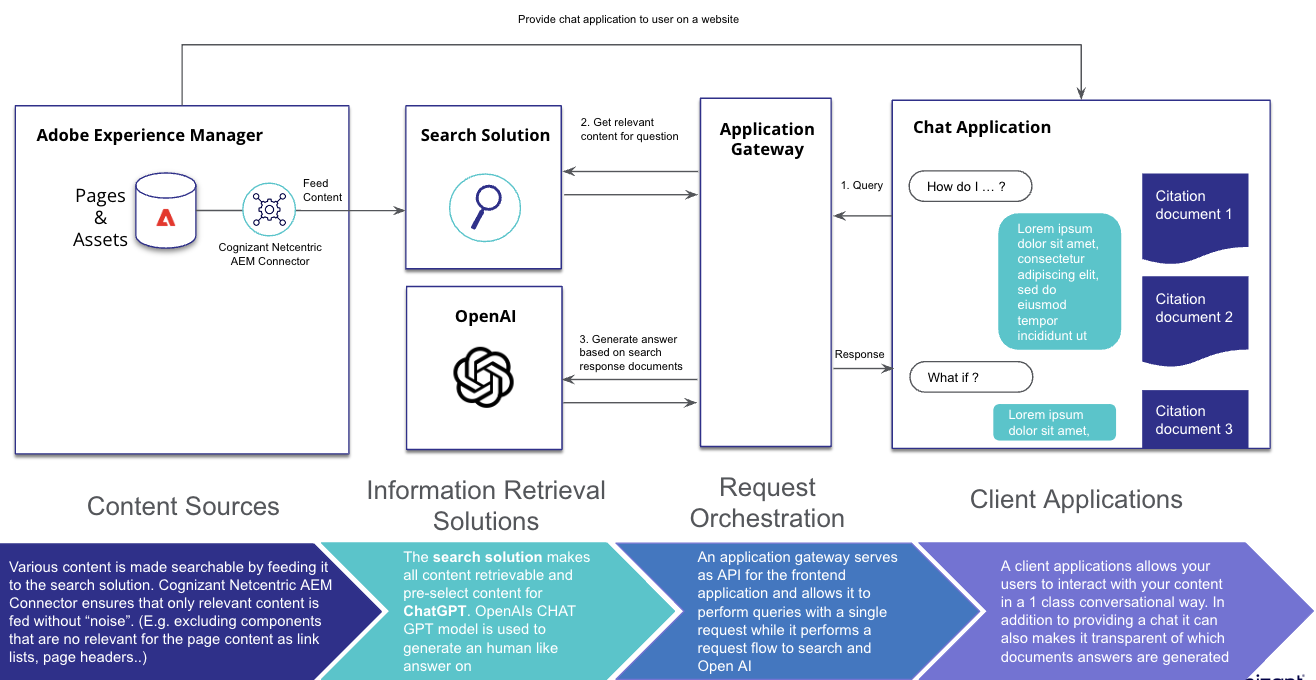

You can use Adobe Experience Manager to author, host, and publish the content that is used for a conversational chat application. It can also host the chat application, so users can access it.

Cognizant Netcentric built an AEM Connector that sends content from AEM to a search solution, which then makes the documents available for searching. There’s an application gateway that can be a microservice, serverless function, or similar, that is used to orchestrate requests from the end user on the chat application. When the application gateway receives a query from the chat application, it performs two steps:

- Retrieve relevant content for the user's query using the search solution

- Let ChatGPT (or another LLM) generate the response to the user’s query from the previously retrieved content, e.g.: “Please generate the answer for this user question <user’s query> using this text <results from step 1> as a source to generate the answer.”
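The two steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not the actual gateway: the in-memory `DOCUMENTS` list and keyword-overlap `search` stand in for a real search solution, and `llm` is a pluggable callable so the example runs without any external service. All names are hypothetical.

```python
from typing import Callable

# Hypothetical in-memory stand-in for content exported to a search index.
DOCUMENTS = [
    {"title": "Mars Explorer", "text": "A seven day trip to Mars with guided crater hikes."},
    {"title": "Saturn Rings Cruise", "text": "A relaxing cruise along the rings of Saturn."},
    {"title": "Moon Weekend", "text": "A short weekend trip to the Moon for beginners."},
]


def _words(text: str) -> set[str]:
    """Lowercase a text and split it into a set of words without punctuation."""
    return {w.strip("?.,!").lower() for w in text.split()}


def search(query: str, top_k: int = 2) -> list[dict]:
    """Step 1: rank documents by how many query words they contain."""
    query_words = _words(query)
    scored = []
    for doc in DOCUMENTS:
        score = len(query_words & _words(doc["title"] + " " + doc["text"]))
        if score > 0:
            scored.append((score, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(query: str, results: list[dict]) -> str:
    """Step 2, first half: combine the user's question with the retrieved text."""
    sources = "\n".join(f"- {doc['title']}: {doc['text']}" for doc in results)
    return (
        f"Please generate the answer for this user question: {query}\n"
        f"Use this text as a source to generate the answer:\n{sources}"
    )


def answer(query: str, llm: Callable[[str], str]) -> str:
    """Run both steps: retrieve content, then let the LLM generate the answer."""
    return llm(build_prompt(query, search(query)))


if __name__ == "__main__":
    # Stub LLM so the sketch runs offline; swap in a real client call.
    def echo_llm(prompt: str) -> str:
        return f"[LLM would answer from]\n{prompt}"

    print(answer("Do you offer a trip to Mars?", echo_llm))
```

Passing the LLM in as a callable keeps the gateway independent of any one model provider, which matches the "ChatGPT or another LLM" wording above.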

There are multiple benefits of using this approach, mostly to do with your control over the content:

- Content used for answers stays within your control; it doesn’t need to be uploaded/synced with ChatGPT.

- Permission-sensitive answers: While retrieving relevant content, you can control whether users have permission to view it. Depending on the permissions, the document can be used to generate the answer or be excluded from the search.

- Neither the user input nor your content is used to train the public ChatGPT model.

- The Cognizant Netcentric AEM Connector is a ready-to-use integration solution to bring content to any search backend, meaning short implementation times for high quality results.

- This approach supports multiple search technologies. While it can use Microsoft’s excellent Azure Cognitive Search as the search solution, you can also use other search engine technologies.
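As an illustration of the permission-sensitive point above, the following sketch filters retrieved documents by the user's groups before they are passed to the LLM. The `Document` class, the `allowed_groups` field, and the rule that an empty set means "public" are all assumptions made for this example; in practice, many search engines can apply such a filter at query time.

```python
from dataclasses import dataclass, field


@dataclass
class Document:
    title: str
    text: str
    # Assumption for this sketch: an empty set means the document is public.
    allowed_groups: set[str] = field(default_factory=set)


def visible_to(user_groups: set[str], results: list[Document]) -> list[Document]:
    """Keep public documents and those sharing at least one group with the user."""
    return [
        doc for doc in results
        if not doc.allowed_groups or doc.allowed_groups & user_groups
    ]


if __name__ == "__main__":
    results = [
        Document("Public offer", "Trips for everyone."),
        Document("Partner pricing", "Internal rates.", {"partners"}),
    ]
    print([d.title for d in visible_to(set(), results)])
    print([d.title for d in visible_to({"partners"}, results)])
```

Only the documents that survive this filter would be included in the prompt, so restricted content never reaches the generated answer for users without access.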

How Cognizant Netcentric’s AEM Connector can help you

Our AEM Connector provides first-class integration between AEM environments and search solutions in AEM On-Premise, AEM as a Cloud Service and Adobe Edge Delivery Services (Next-Gen Composability) environments. The key advantage and differentiator of our AEM Connector is its ability to specifically select content to export to search engines. This ensures that only relevant content is used to generate answers. Even ChatGPT has its limits; it can’t generate good answers with poor content. Our AEM Connector exports relevant content in real time, meaning the most recent content is instantly available to build answers without the help of a crawler. This is a head start for businesses who are racing to be the first to take their users along a digital journey.

Looking towards the future: Transforming Enterprise Experiences with Conversational AI

The future of conversational AI solutions for enterprise is full of potential. By enriching AI chatbots and virtual assistants with content from Adobe Experience Manager (AEM), a PIM system, or other sources, businesses can improve their customer experiences and drive engagement like never before. Conversational AI applications integrated with AEM and Adobe Experience Platform (AEP) enable personalized interactions, streamlined content delivery, and proactive customer support, revolutionizing the way enterprises connect with their audience. As conversational AI continues to evolve, we can expect even more sophisticated virtual assistants that seamlessly integrate with AEM, enabling enterprises to deliver highly tailored and efficient user experiences.

Cognizant Netcentric is your partner

Optimizing information retrieval is one of the most important factors in building an excellent virtual assistant. Cognizant Netcentric has over 10 years of experience in this domain, building tailored, state-of-the-art search solutions. We bring standardized solution blueprints, project accelerators like the AEM Connector to integrate AEM from the start, and a dedicated Applied Intelligence Community where experts share best practices in AI, information retrieval, and search solutions. We’re able to guide organizations end-to-end on building a virtual assistant for their website.

Get in touch with our team today to start transforming the way your users experience your content.