How to get the most out of your cloud infrastructure: AEM on AWS

Netcentric has a long history of operating Adobe Experience Cloud (AEC) infrastructure for its clients, specifically Adobe Campaign (AC) and Adobe Experience Manager (AEM). In recent years, as the IT industry has changed, cloud infrastructure has become increasingly widespread and accepted. So when we were asked to migrate a large AEM-based web portal to a new infrastructure setup, the selection process was straightforward. Back in 2016, AWS was the clear choice: its services were well established, and it offered every service we needed in the cloud.

Only a month passed between initiating the project and completing the migration, which itself took just a few days and was a complete success for our client. Not much has changed since then: we still migrate AEM platforms to AWS and carry out several migrations for our clients each year, some simpler and some more complex.

To get the most out of your infrastructure, you must follow a DevOps approach: automate as much as possible while keeping everything maintainable. This is sometimes a challenge, but with tools such as Puppet (https://github.com/bstopp/puppet-aem), the Apply Tool (https://github.com/Netcentric/apply-server) and others such as Jenkins or Bamboo, we can automate most daily routines to a high standard. In particular, these tools make it possible to run rolling deployments to the Publish instances, or even to adopt a blue-green deployment approach.
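To make the idea of a rolling deployment more concrete, here is a minimal Python sketch, assuming the Publish instances sit behind a single load balancer. The callbacks `detach`, `deploy`, `healthy` and `attach` are hypothetical placeholders for whatever your tooling actually does (for example deregistering an instance from its load balancer target group, or installing a release with the Apply Tool); they are not APIs of Puppet, Jenkins or AWS. A blue-green deployment would instead switch traffic between two complete sets of instances and is not shown here.

```python
from typing import Callable, Iterable, List


def rolling_deploy(
    publishers: Iterable[str],
    detach: Callable[[str], None],   # hypothetical: remove instance from the load balancer
    deploy: Callable[[str], None],   # hypothetical: install the new release on one instance
    healthy: Callable[[str], bool],  # hypothetical: post-deployment health check
    attach: Callable[[str], None],   # hypothetical: put instance back into the load balancer
) -> List[str]:
    """Update Publish instances one at a time so the others keep serving traffic.

    Returns the names of the instances that were updated. If a health check
    fails, the rollout stops and the failed instance stays out of rotation.
    """
    updated: List[str] = []
    for host in publishers:
        detach(host)
        deploy(host)
        if not healthy(host):
            raise RuntimeError(f"health check failed on {host}; rollout halted")
        attach(host)
        updated.append(host)
    return updated
```

Because only one instance is out of rotation at any time, a two-Publish setup keeps serving traffic throughout, and halting on the first failed health check stops a broken release from reaching the remaining instances.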

Blueprint AEM AWS architecture

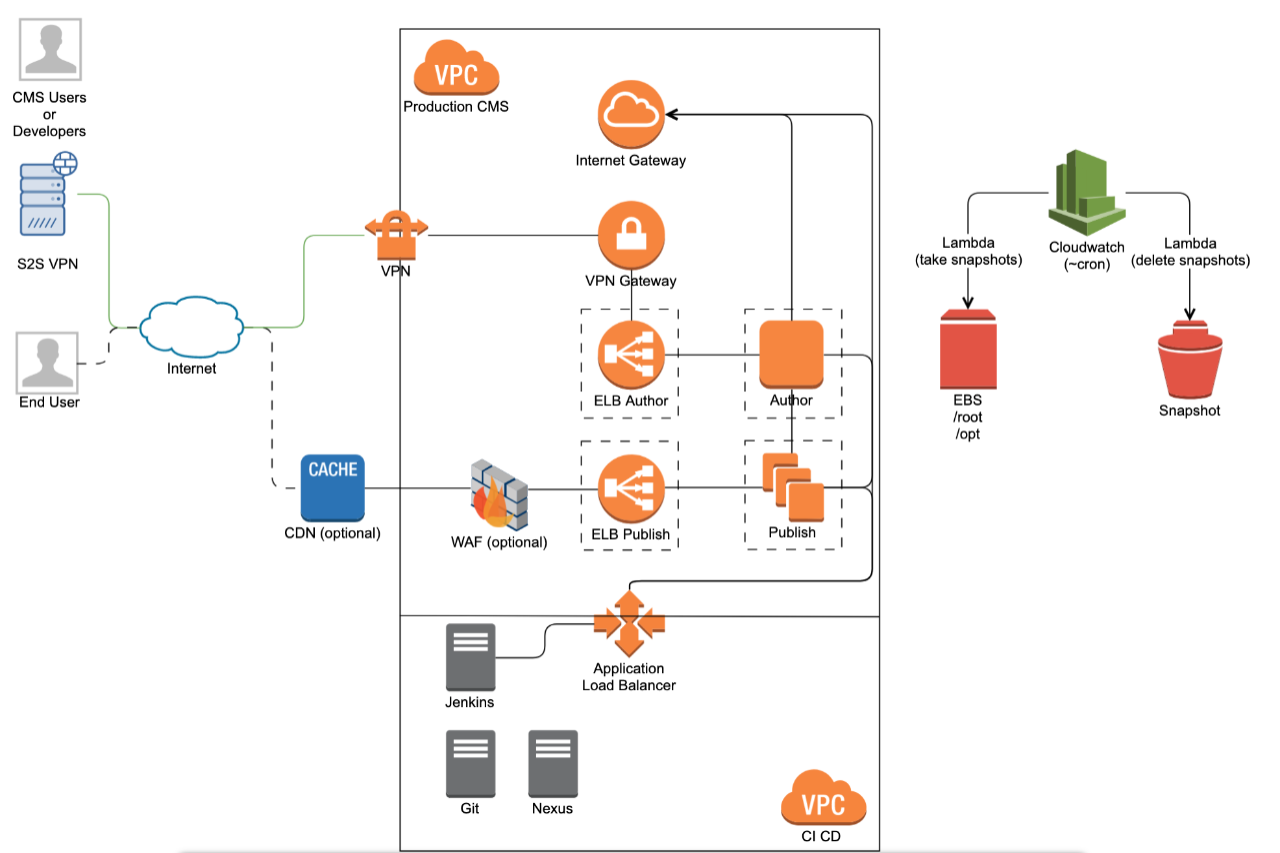

The architecture chosen in this example is a pragmatic one: it offers a high degree of flexibility while meeting all standard security requirements.

Diagram 1: AWS AEM basic blueprint

AEM Layout

The most basic redundant AEM architecture we recommend is the classic setup of one Author and two Publish instances, with the two Publish instances distributed across two availability zones. For simplicity, we tend to run the Apache HTTPD web server on the same instance as AEM; from a performance perspective, this has minimal impact.
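The redundancy requirement in this layout can be expressed as a simple check over an instance inventory. The following Python sketch is illustrative only: the `Instance` type, the instance names and the availability zone names are hypothetical, and a real inventory would come from your own tooling.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Instance:
    name: str
    role: str  # "author" or "publish"
    az: str    # availability zone the EC2 instance runs in


def publish_is_redundant(instances: List[Instance]) -> bool:
    """True if there are at least two Publish instances spread over at least two AZs."""
    publish = [i for i in instances if i.role == "publish"]
    return len(publish) >= 2 and len({i.az for i in publish}) >= 2


# Hypothetical basic layout: one Author, two Publish instances in different AZs.
BASIC_LAYOUT = [
    Instance("author-1", "author", "eu-central-1a"),
    Instance("publish-1", "publish", "eu-central-1a"),
    Instance("publish-2", "publish", "eu-central-1b"),
]
```

If both Publish instances were placed in the same zone, the check would fail, because losing that single zone would take the whole Publish tier offline.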

A more sophisticated setup can use content sharding: two Author instances run the same deployed release and, where master content exists, sync it from one to the other. All other content is not shared, but both Author instances replicate to the same three Publish instances. This improves Author-side performance and enables scaling, and dedicating one Author system to each time zone also allows for longer maintenance windows when needed.
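One way to reason about this sharded setup is as a mapping from content paths to the Author instance that owns them. The Python sketch below assumes hypothetical content roots per time zone (`/content/site-emea`, `/content/site-americas`), a hypothetical master content root `/content/master` that is edited on one Author, and three Publish instances. It only computes which Author replicates a given path and which Authors receive synced master content; it does not call any AEM API.

```python
from typing import Dict, List

# Hypothetical shard map: each Author (one per time zone) owns its own content tree.
SHARDS: Dict[str, str] = {
    "/content/site-emea": "author-emea",
    "/content/site-americas": "author-americas",
}
# Hypothetical master content, edited on one Author and synced to the other.
MASTER_ROOT = "/content/master"
MASTER_OWNER = "author-emea"
# Both Authors replicate to the same Publish instances.
PUBLISHERS: List[str] = ["publish-1", "publish-2", "publish-3"]


def _under(path: str, root: str) -> bool:
    return path == root or path.startswith(root + "/")


def owning_author(path: str) -> str:
    """Return the Author instance responsible for a content path."""
    if _under(path, MASTER_ROOT):
        return MASTER_OWNER
    for root, author in SHARDS.items():
        if _under(path, root):
            return author
    raise KeyError(f"no shard owns {path}")


def replication_plan(path: str) -> Dict[str, List[str]]:
    """Map the owning Author to the Publish instances it replicates the path to."""
    return {owning_author(path): list(PUBLISHERS)}


def master_sync_targets() -> List[str]:
    """Authors that receive master content from the master owner."""
    return [a for a in sorted(set(SHARDS.values())) if a != MASTER_OWNER]
```

Keeping the shard boundaries on whole content trees means each path has exactly one owning Author, so the two Authors never replicate conflicting versions of the same page.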

Security

Living in the cloud always raises questions about security. Beyond the general question of whether the cloud itself is secure, there is the question of how to implement proper zoning. Zoning itself is no different than in a classical datacenter, but in AWS you can use load balancers very efficiently and should use security groups to further segregate network connections where needed. For more details, the first stop should always be AWS’s own documentation: https://docs.aws.amazon.com/en_en/vpc/latest/userguide/vpc-network-acls.html.

Zoning and segregation

A two-zone concept, with one non-production and one production Virtual Private Cloud (VPC), is good practice for AWS-based setups. Each environment within a VPC can then be further segregated through security groups, and each EC2 instance is accessible only via an internal IP. Placing the instances in private subnets and the Elastic Load Balancers (ELBs) in public subnets is the best and safest approach: no instance is exposed externally, and traffic reaches the instances only through the load balancer, usually over HTTPS. This provides a very high level of protection against potential threats. If integrations require fixed outgoing IPs, a Network Address Translation Gateway (NAT GW) is deployed so that the instances can connect to external systems. This becomes necessary when a third-party system only accepts connections from whitelisted IP addresses.
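The segregation described above can be modelled as inbound security group rules that reference each other. The Python sketch below is a simplified model, not the AWS API: the group names, ports and the `203.0.113.0/24` office range are hypothetical. It reflects only the basic behavior that a security group denies inbound traffic unless at least one rule allows it, where a rule can allow either a CIDR block or members of another security group.

```python
import ipaddress
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass(frozen=True)
class Rule:
    port: int
    cidr: Optional[str] = None       # allow traffic from this CIDR block
    source_sg: Optional[str] = None  # or from instances in this security group


# Hypothetical inbound rules: only the ALB is reachable from the internet,
# AEM instances accept traffic from the ALB and SSH from the jump host only.
SECURITY_GROUPS: Dict[str, List[Rule]] = {
    "alb-sg": [Rule(443, cidr="0.0.0.0/0")],
    "aem-sg": [Rule(80, source_sg="alb-sg"), Rule(22, source_sg="jump-sg")],
    "jump-sg": [Rule(22, cidr="203.0.113.0/24")],
}


def is_allowed(dest_sg: str, port: int,
               src_ip: Optional[str] = None, src_sg: Optional[str] = None) -> bool:
    """Return True if any inbound rule of dest_sg allows this connection."""
    for rule in SECURITY_GROUPS.get(dest_sg, []):
        if rule.port != port:
            continue
        if rule.source_sg is not None and rule.source_sg == src_sg:
            return True
        if rule.cidr is not None and src_ip is not None:
            if ipaddress.ip_address(src_ip) in ipaddress.ip_network(rule.cidr):
                return True
    return False  # no matching rule: denied by default
```

Referencing the ALB security group instead of IP ranges keeps the AEM instances reachable only through the load balancer, even when the load balancer's own addresses change.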

Accessing the instances

Access to the instances is always a security concern. In general, we deploy a jump host in each VPC and manage it through Puppet, which configures the SSH keys of the users stored in our repository. This is a simple but effective way to provide controlled access. In addition, only whitelisted IP addresses can connect to the jump hosts. Apart from the NAT GWs, these hosts are the only instances with public IPs.
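The rule that only jump hosts carry public IPs lends itself to a simple automated audit. The Python sketch below works on a hypothetical inventory format (`Host` with a role and an optional public IP); in practice the data would come from your own tooling, and NAT gateways are assumed to be tracked separately because they are managed AWS resources rather than EC2 instances.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class Host:
    name: str
    role: str                 # hypothetical roles: "jump", "author", "publish"
    public_ip: Optional[str]  # None if the instance only has a private IP


def unexpected_public_hosts(hosts: List[Host]) -> List[str]:
    """Return the names of non-jump hosts that have a public IP assigned."""
    return [h.name for h in hosts if h.public_ip is not None and h.role != "jump"]


# Hypothetical inventory of one VPC.
INVENTORY = [
    Host("jump-1", "jump", "198.51.100.20"),
    Host("author-1", "author", None),
    Host("publish-1", "publish", None),
    Host("publish-2", "publish", None),
]
```

Running such a check regularly, for example from the CI server, flags any instance that was accidentally given a public IP outside the intended access path.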

Deployments

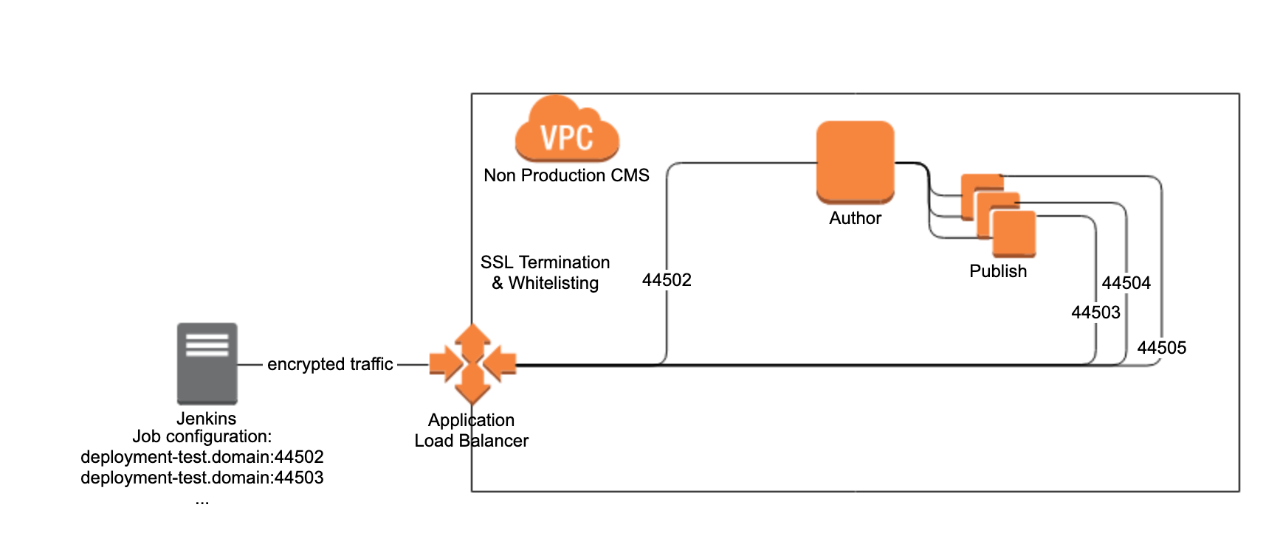

Now you may ask: how does one deploy to such cloud-based instances? Using Application Load Balancers (ALBs) is the simplest way. By giving each instance its own target group, reachable on its own port, and whitelisting IPs, we can allow external access via HTTPS from specific IPs to deploy to our AEM instances directly. Tools such as the Netcentric Apply Tool can be used for remote administration, but more on this in Part 2 of the blog!
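The "one port per instance" idea can be illustrated as a lookup from ALB listener port to target, guarded by an IP whitelist. This Python sketch only models the routing decision and does not configure AWS: the listener ports, instance names and the whitelisted `192.0.2.10` address (standing in for the Jenkins server's egress IP) are hypothetical, while 4502 and 4503 are AEM's default Author and Publish ports.

```python
import ipaddress
from typing import Dict, Tuple

# Hypothetical ALB listeners: one HTTPS port per AEM instance, each forwarding
# to its own target group (instance name, backend AEM port).
LISTENERS: Dict[int, Tuple[str, int]] = {
    8443: ("author-1", 4502),
    8444: ("publish-1", 4503),
    8445: ("publish-2", 4503),
}
# Hypothetical whitelist, e.g. the egress IP of an external Jenkins server.
DEPLOY_WHITELIST = [ipaddress.ip_network("192.0.2.10/32")]


def route_deploy(src_ip: str, listener_port: int) -> Tuple[str, int]:
    """Return the (instance, backend port) a deployment request would reach."""
    addr = ipaddress.ip_address(src_ip)
    if not any(addr in net for net in DEPLOY_WHITELIST):
        raise PermissionError(f"{src_ip} is not whitelisted")
    try:
        return LISTENERS[listener_port]
    except KeyError:
        raise LookupError(f"no listener on port {listener_port}") from None
```

Mapping each instance to a dedicated port lets the CI server address a single Author or Publish instance explicitly, which a normal load-balanced listener would not allow.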

Diagram 2: Deployments via ALB from external Jenkins

Benefits

Running directly on AWS, with Netcentric providing Application Operations and Infrastructure Management, gives our clients great flexibility in their infrastructure and in how we deliver web experiences for them. AWS brings a multitude of benefits:

- extremely high infrastructure redundancy

- fast resizing of AWS EC2 instances to gain more power

- snapshot-based backups multiple times a day

- S3-based datastore

- built-in monitoring and log aggregation tools

- clear and transparent cost model

But these are not all the benefits. AWS also offers many services relevant to a classic software development project:

- Web Application Firewalls (WAF)

- Developer tools such as CodeCommit, CodeBuild, CodeDeploy and CodePipeline

- CloudFront as a Content Delivery Network (CDN)

Conclusion

When thinking about moving to the cloud, consider several factors. The choice of provider plays a major role, and the location of the datacenter may matter as well. If you have no in-house architects with cloud experience and no infrastructure team, be sure to work with a partner that’s experienced in the solutions you are considering. With AWS and its broad range of solutions, it’s possible to build a very reliable, flexible and transparent cloud infrastructure platform for your Adobe Experience Cloud products, such as AEM.